Depth-Aware Pixel2Mesh

CS 231N Final Project

CS 231N (Deep Learning for Computer Vision) has probably been my favorite class at Stanford so far. It was incredibly well taught and went deeper into many of the topics I had found especially interesting in my own ML/DL research over the years. As early as high school, I remember watching Andrej Karpathy’s CS 231N lectures on YouTube, and they were actually one of the reasons I was excited to apply to Stanford in the first place.

The course covers a wide variety of topics, but here I want to share my final project, which I had the pleasure of working on with Julian Quevedo and Rohin Manvi. If you would like to read our full paper, you can find it here.

Background

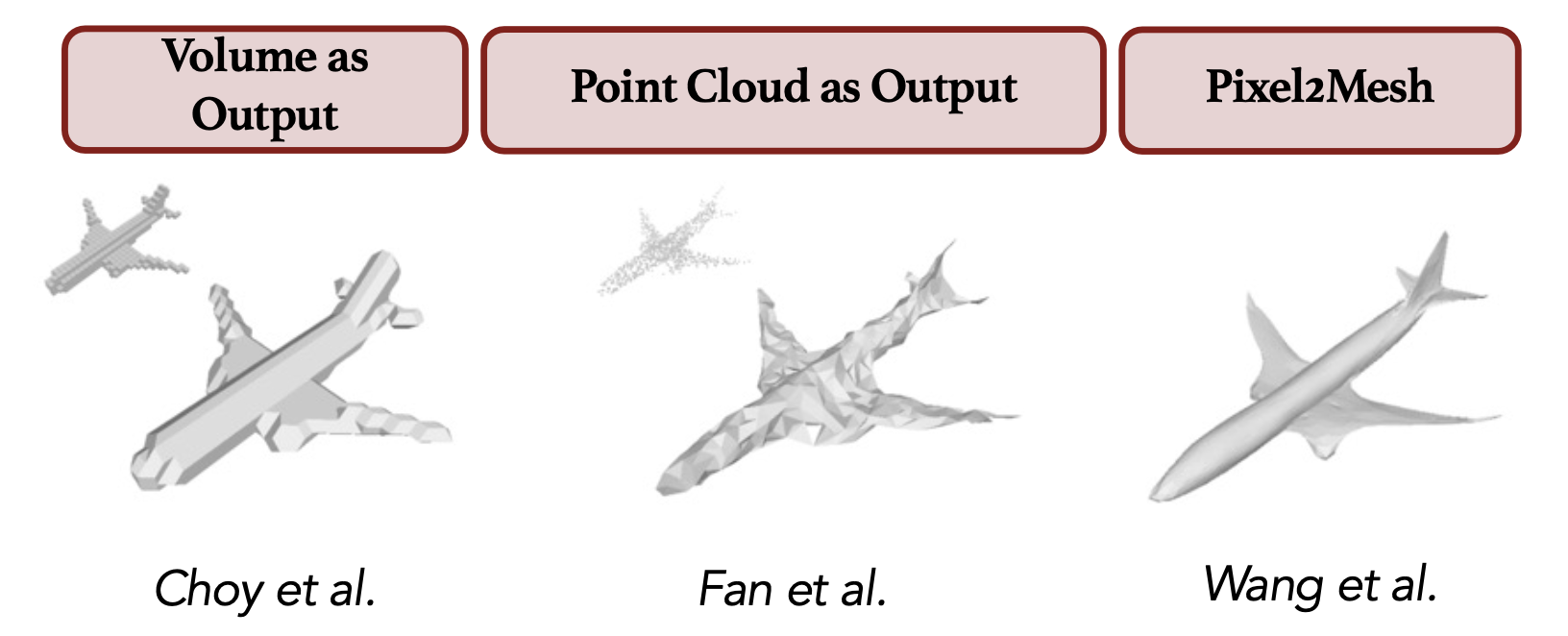

Recent computer vision models have become increasingly accurate at object detection and related tasks on 2D inputs:

- Models like Mask R-CNN

- Instance and semantic segmentation

However, the world around us is 3D, and much work remains to be done in developing a computational understanding of 3D shapes and objects.

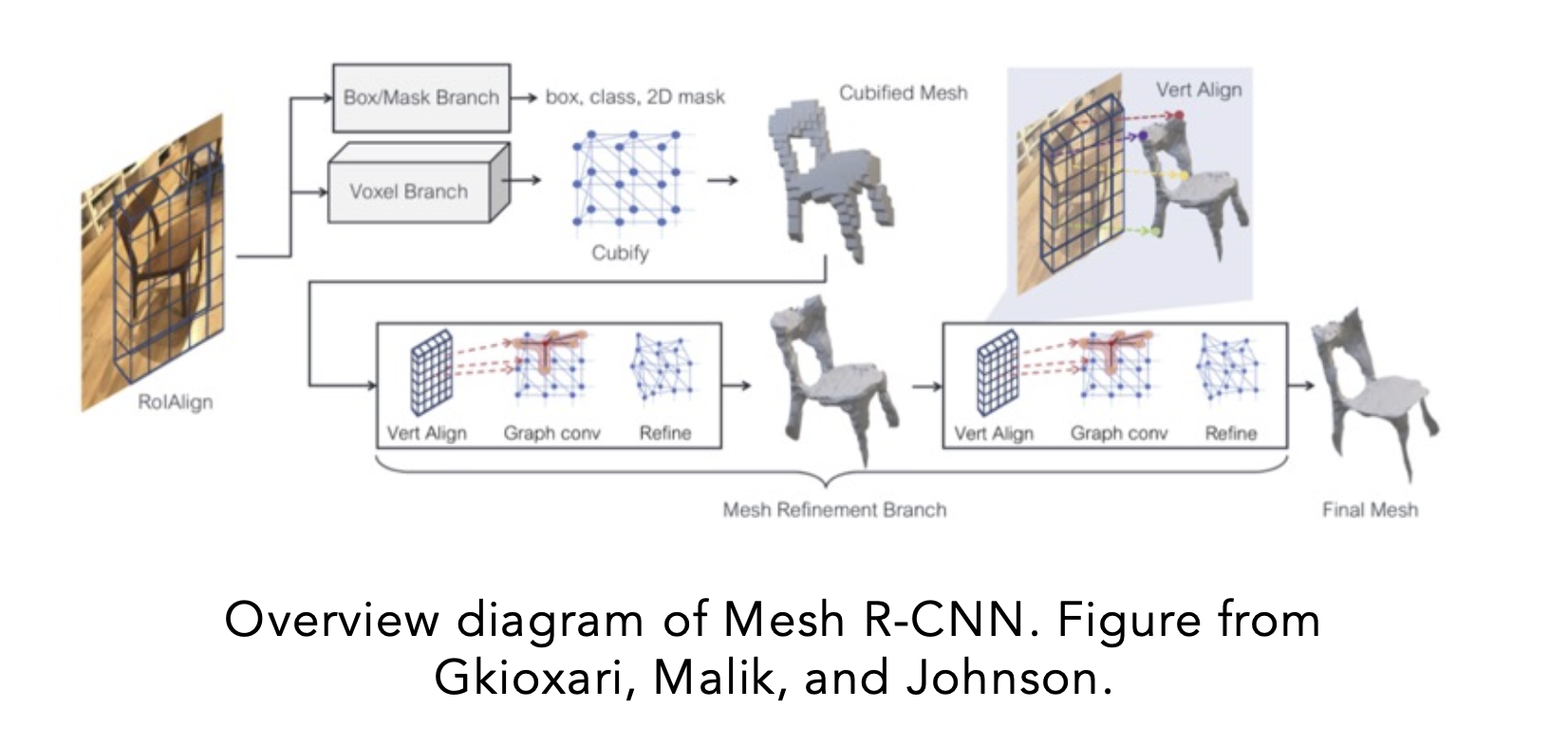

Mesh R-CNN is one major advancement in this space:

- It constructs topologically accurate 3D meshes from a single 2D RGB image by first predicting voxel representations

- It converts these voxel representations into a mesh and refines it with a graph neural network (GNN).

Proposed Solution

Current systems lack a major component of how we as humans perceive the world around us: depth.

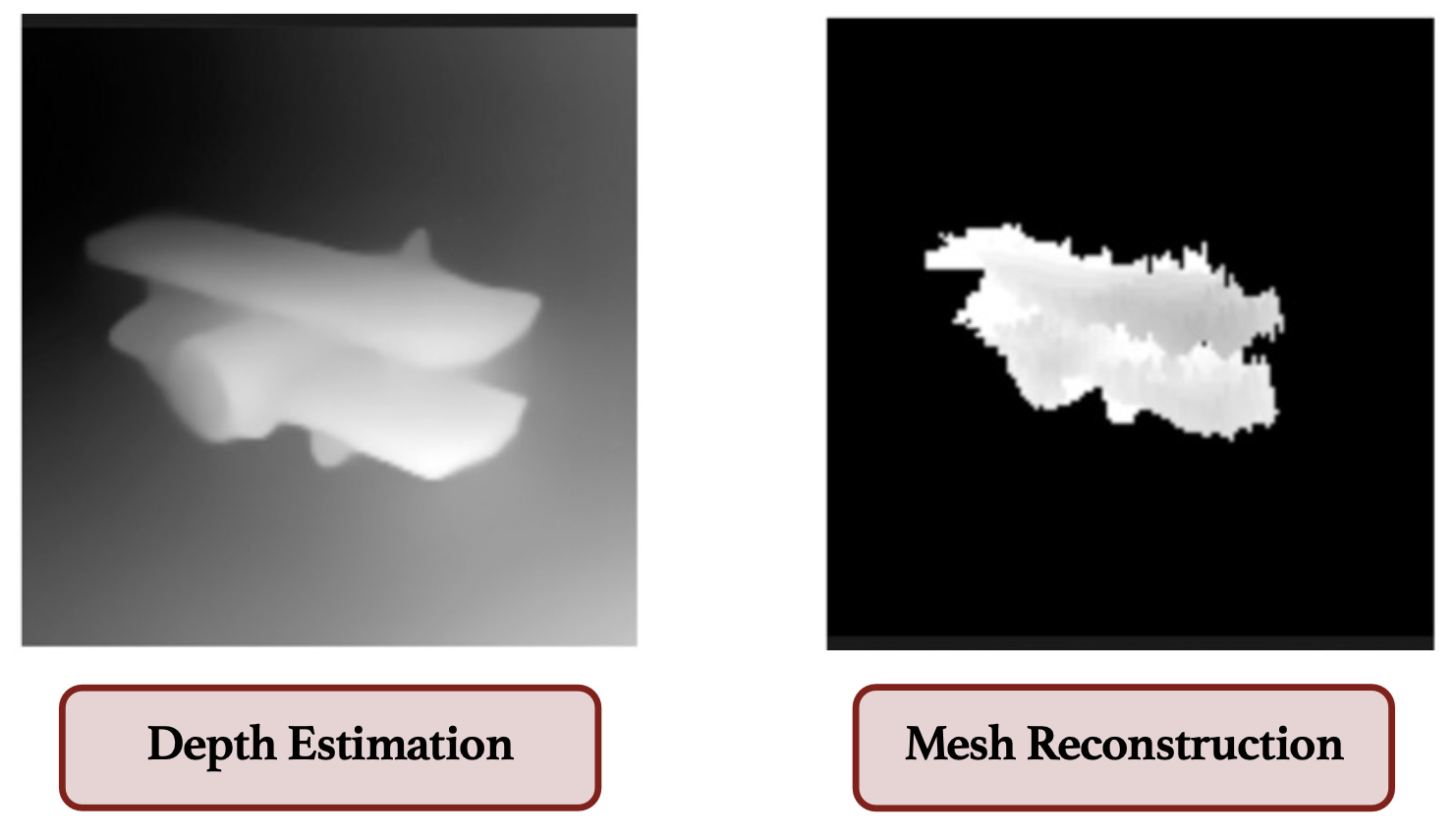

We aim to extend existing models by giving them depth-aware inputs and measuring the resulting changes in performance. The depth images are generated with the MiDaS depth estimation model.

Methodology and Results

Phase 1: Differentiable Rendering

To provide additional supervision for our generated meshes, we considered augmenting the loss with differentiable rendering:

- Use a differentiable rasterizer to render a depth map of the generated mesh

- Measure the error between the rendered depth map and the “ground-truth” depth channel

This would let our model take further advantage of the RGB-D images by producing a mesh with the same depth characteristics as the input depth map. To compare the rendered depth maps meaningfully with those from the input images, we need the following:

- The loss must be scale-invariant.

- Rendered depth maps must be from the same camera positions as the input images.
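The write-up does not specify which scale-invariant loss was intended. As one illustrative option, here is a minimal NumPy sketch of the scale-invariant log-depth loss from Eigen et al. (2014). With `lam=1.0` the loss is the variance of the per-pixel log-depth differences, so multiplying the prediction by any positive constant leaves it unchanged. The function name, the `lam` parameter, and the rule that only pixels with positive depth in both maps count as valid are our assumptions. That validity rule would, for example, drop background pixels marked as −1.

```python
import numpy as np


def scale_invariant_log_loss(pred_depth, target_depth, valid_mask=None, lam=1.0):
    """Scale-invariant log-depth loss (Eigen et al., 2014), computed over valid pixels.

    Hypothetical helper: pixels are valid only if both depths are positive,
    unless an explicit boolean mask is given.
    """
    pred = np.asarray(pred_depth, dtype=np.float64)
    target = np.asarray(target_depth, dtype=np.float64)
    if valid_mask is None:
        valid_mask = (pred > 0) & (target > 0)
    # Per-pixel log-depth differences; a global scale on pred adds a constant to d.
    d = np.log(pred[valid_mask]) - np.log(target[valid_mask])
    if d.size == 0:
        return 0.0
    # mean(d^2) - lam * mean(d)^2; with lam = 1 this is the variance of d.
    return float(np.mean(d ** 2) - lam * np.mean(d) ** 2)
```

For a prediction that differs from the target only by a global scale (say `2 * target`), the loss is zero up to floating-point error. A prediction with the wrong relative shape gives a positive loss.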

However, we ran into further difficulties and were unable to fully implement the scale-invariant depth loss:

- MiDaS hallucinates a ground plane beneath the ShapeNet renderings.

- The differentiable renderer simply marks all background points as −1.

- MiDaS outputs an inverse depth map rather than a depth map.

We hope to investigate solving these problems together in future work.
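The project did not implement the following. We include it only as a minimal sketch of one common way to compare a relative inverse-depth map like MiDaS's with a rendered depth map. The idea is to convert the rendered depth to inverse depth and fit a per-image scale and shift by least squares over valid pixels; MiDaS's own evaluation aligns predictions with a scale and shift in a similar way. The function name is hypothetical. We also assume that only positive rendered depths are valid. This discards the −1 background, and with it any ground plane MiDaS hallucinates outside the object silhouette.

```python
import numpy as np


def align_inverse_depth(midas_inv_depth, rendered_depth):
    """Fit s, t so that s * midas + t approximates 1 / rendered_depth on valid pixels.

    Hypothetical helper; needs at least two valid pixels with distinct values.
    """
    midas = np.asarray(midas_inv_depth, dtype=np.float64)
    rendered = np.asarray(rendered_depth, dtype=np.float64)
    # The renderer marks background as -1, so keep only positive depths.
    valid = rendered > 0
    target = 1.0 / rendered[valid]               # rendered depth -> inverse depth
    x = midas[valid]
    A = np.stack([x, np.ones_like(x)], axis=1)   # design matrix columns: [midas, 1]
    (s, t), *_ = np.linalg.lstsq(A, target, rcond=None)
    aligned = s * midas + t                      # MiDaS map in the renderer's inverse-depth units
    return aligned, valid, float(s), float(t)
```

After alignment, `aligned[valid]` and `1 / rendered_depth[valid]` are in the same units, so a loss computed on the valid pixels only would be meaningful.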

RGB-D Backbone and Mesh Refinement Head

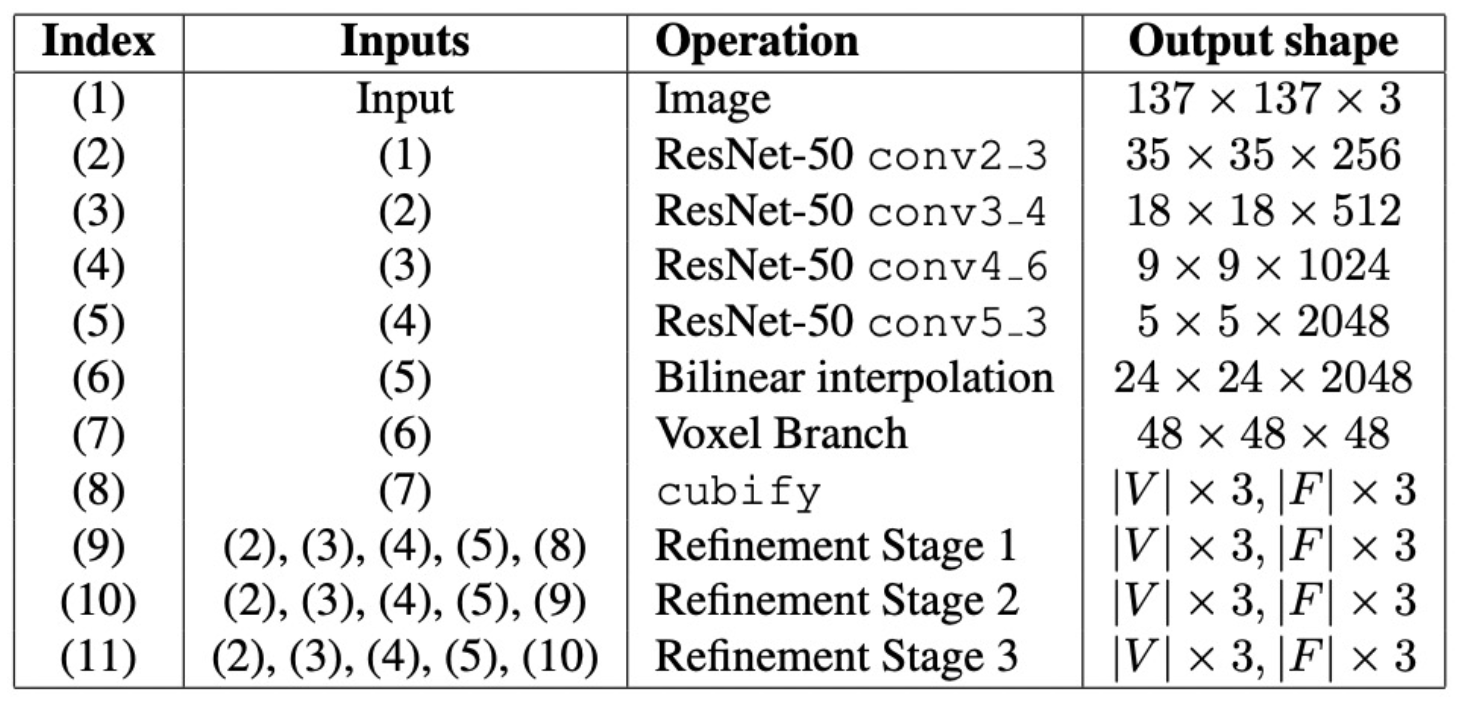

To allow Mesh R-CNN to take RGB-D images as input, we changed the first ResNet layer to learn four-channel filters instead of three-channel filters. We take advantage of pretraining by copying over the weights for the first three channels and train only the fourth channel's weights from scratch.
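The weight-copying step can be sketched in PyTorch as follows. This is a minimal illustration, not the project's code. The function name and the small normal initialization for the depth channel (`init_std`) are assumptions.

```python
import torch
import torch.nn as nn


def inflate_first_conv(conv_rgb: nn.Conv2d, init_std: float = 0.01) -> nn.Conv2d:
    """Hypothetical helper: build a 4-channel (RGB-D) copy of a pretrained 3-channel conv."""
    conv_rgbd = nn.Conv2d(
        in_channels=4,
        out_channels=conv_rgb.out_channels,
        kernel_size=conv_rgb.kernel_size,
        stride=conv_rgb.stride,
        padding=conv_rgb.padding,
        dilation=conv_rgb.dilation,
        bias=conv_rgb.bias is not None,
    )
    with torch.no_grad():
        # Channels 0-2 reuse the pretrained RGB filters unchanged.
        conv_rgbd.weight[:, :3] = conv_rgb.weight
        # Channel 3 (depth) starts from a small random initialization.
        nn.init.normal_(conv_rgbd.weight[:, 3:], mean=0.0, std=init_std)
        if conv_rgb.bias is not None:
            conv_rgbd.bias.copy_(conv_rgb.bias)
    return conv_rgbd


# Stand-in for a pretrained ResNet stem convolution (7x7, stride 2).
stem = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
stem_rgbd = inflate_first_conv(stem)
print(stem_rgbd(torch.randn(1, 4, 224, 224)).shape)  # torch.Size([1, 64, 112, 112])
```

In a real backbone, `conv_rgb` would be the pretrained stem convolution, and the returned module would replace it. We don't name the exact attribute path because it depends on the codebase.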

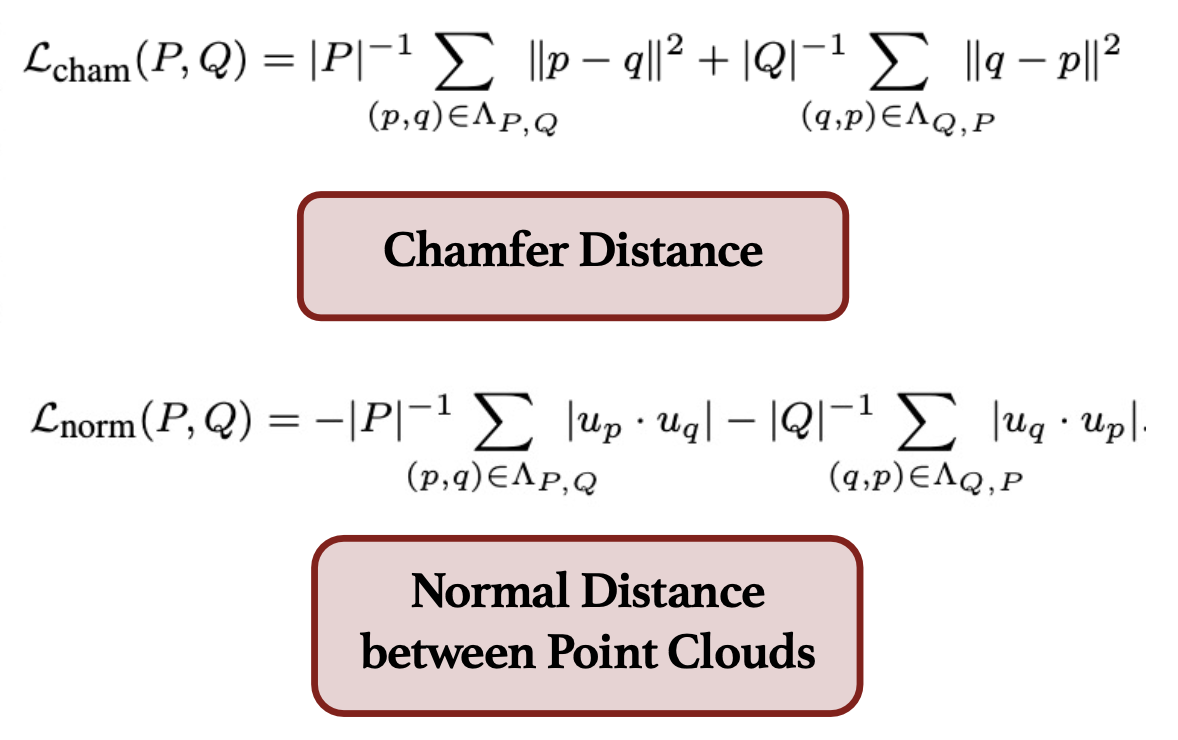

We use the Chamfer distance and the normal distance as losses for the mesh. Point clouds P and Q are sampled from the ground-truth mesh and from the model's intermediate mesh predictions, respectively.
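Here is a minimal NumPy sketch of these two losses, following their definitions in the Mesh R-CNN paper. The Chamfer distance averages squared nearest-neighbor distances in both directions. The normal distance is the negated mean absolute cosine between each point's unit normal and the normal of its nearest neighbor in the other cloud. The function name and the brute-force pairwise distance matrix are our choices for clarity. Because the matrix uses O(|P||Q|) memory, training code would normally use a batched GPU implementation such as PyTorch3D's `chamfer_distance`.

```python
import numpy as np


def chamfer_and_normal_distance(P, Q, normals_P, normals_Q):
    """Chamfer and normal distances between point clouds P (N, 3) and Q (M, 3).

    normals_P and normals_Q are assumed to be unit normals for each sampled point.
    """
    P, Q = np.asarray(P, dtype=float), np.asarray(Q, dtype=float)
    nP, nQ = np.asarray(normals_P, dtype=float), np.asarray(normals_Q, dtype=float)
    # Pairwise squared distances, shape (N, M).
    d2 = ((P[:, None, :] - Q[None, :, :]) ** 2).sum(axis=-1)
    nn_q_for_p = d2.argmin(axis=1)  # nearest q for each p
    nn_p_for_q = d2.argmin(axis=0)  # nearest p for each q
    chamfer = d2.min(axis=1).mean() + d2.min(axis=0).mean()
    # |cos| between matched normals; the absolute value ignores normal orientation.
    cos_pq = np.abs((nP * nQ[nn_q_for_p]).sum(axis=-1))
    cos_qp = np.abs((nQ * nP[nn_p_for_q]).sum(axis=-1))
    normal = -cos_pq.mean() - cos_qp.mean()
    return float(chamfer), float(normal)
```

Identical clouds with identical normals give a Chamfer distance of 0 and a normal distance of −2, which is the best possible value. Perpendicular matched normals contribute 0.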

Dataset and Features

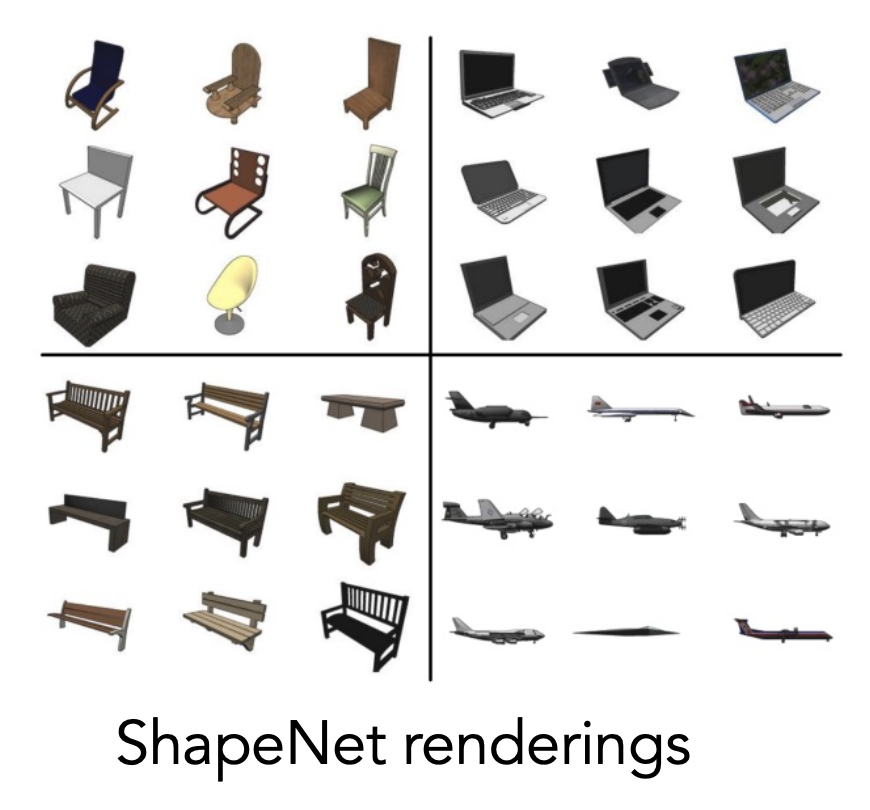

We trained our model on two datasets: ShapeNet Core (along with renderings from R2N2) and Pix3D. ShapeNet Core contains over 50,000 3D meshes, and R2N2 provides rendered images of them.

We use MiDaS to predict each image’s depth map, which we stack with the RGB channels to produce four-channel RGB-D images.
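Here is a minimal NumPy sketch of the stacking step. It assumes the RGB image is a float array in [0, 1] with shape (H, W, 3) and that the MiDaS prediction has already been resized to (H, W). Min-max normalizing the depth channel per image is also our assumption, since MiDaS output is relative and its raw range varies between images. The write-up does not say how the depth channel was scaled.

```python
import numpy as np


def make_rgbd(rgb, depth):
    """Hypothetical helper: stack an (H, W, 3) RGB image and an (H, W) depth map into (H, W, 4)."""
    rgb = np.asarray(rgb, dtype=np.float32)
    depth = np.asarray(depth, dtype=np.float32)
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("rgb must have shape (H, W, 3)")
    if depth.shape != rgb.shape[:2]:
        raise ValueError("depth must have shape (H, W) matching rgb")
    # Per-image min-max normalization so the depth channel lies in [0, 1].
    d_min, d_max = depth.min(), depth.max()
    if d_max > d_min:
        depth_norm = (depth - d_min) / (d_max - d_min)
    else:
        depth_norm = np.zeros_like(depth)
    # Append depth as the fourth channel.
    return np.concatenate([rgb, depth_norm[..., None]], axis=-1)
```

A PyTorch backbone expects channels first, so the result would be transposed to (4, H, W) before batching.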

Results and Future Work

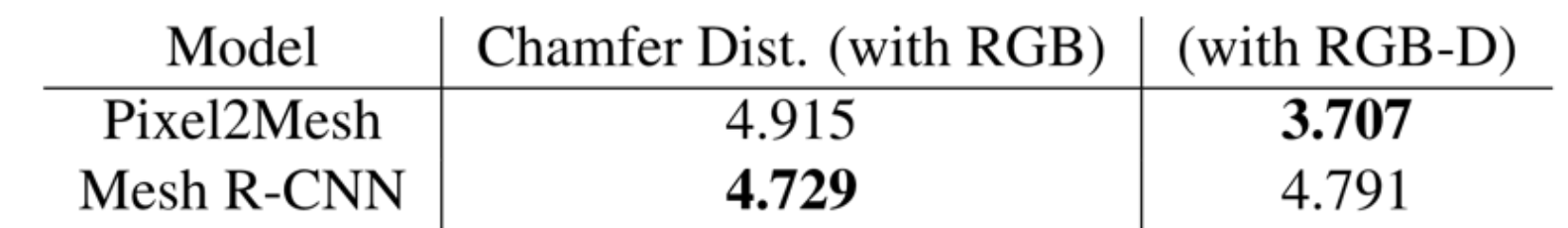

Adding depth resulted in a clear improvement for Pixel2Mesh, but seemed to make little difference for Mesh R-CNN.

A possible extension is to construct colored meshes:

- Accurately represent textures and materials that appear in images

- Since we represent the meshes as graphs, our idea is to incorporate color information as an additional node feature

- To supervise our color predictions, we therefore need the meshes in the datasets to have vertex colorings

- To enable Mesh R-CNN to predict the color of each vertex, we plan to increase the dimension of the node features predicted by the mesh refinement stage

- Instead of predicting 3-dimensional features, we will predict 6-dimensional features, where the first three correspond to the vertex coordinates and the second three correspond to RGB values

We also plan to continue the unfinished work on using differentiable rendering and the depth images during training. We hope to overcome the roadblocks described above, and we hypothesize that, thanks to the additional supervision during training, this approach will outperform the current depth-aware Pixel2Mesh.
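To make the planned colored-mesh extension more concrete, here is a hypothetical PyTorch sketch of a per-vertex head that outputs 6 values instead of 3. The following are all assumptions, not the project's design:

- The names `ColoredVertexHead` and `nearest_vertex_color_loss`.
- Applying the first three outputs as offsets to the current vertex positions, similar to how Mesh R-CNN's refinement stage predicts vertex offsets.
- Squashing colors into [0, 1] with a sigmoid.
- Supervising each predicted vertex with the color of its nearest ground-truth vertex. Predicted and ground-truth meshes do not share vertices, so some matching step is needed.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ColoredVertexHead(nn.Module):
    """Hypothetical head predicting 6 values per vertex: 3 coordinate offsets + 3 RGB."""

    def __init__(self, feat_dim: int):
        super().__init__()
        self.proj = nn.Linear(feat_dim, 6)

    def forward(self, vert_feats: torch.Tensor, verts: torch.Tensor):
        out = self.proj(vert_feats)            # (V, 6)
        offsets, rgb_logits = out[:, :3], out[:, 3:]
        new_verts = verts + offsets            # first three: vertex coordinates
        colors = torch.sigmoid(rgb_logits)     # second three: RGB in [0, 1]
        return new_verts, colors


def nearest_vertex_color_loss(pred_verts, pred_colors, gt_verts, gt_colors):
    """L1 loss between each predicted color and the color of its nearest ground-truth vertex."""
    nearest = torch.cdist(pred_verts, gt_verts).argmin(dim=1)  # (V_pred,)
    return F.l1_loss(pred_colors, gt_colors[nearest])
```

The geometry losses (Chamfer and normal distance) would still apply to `new_verts`, and the color loss would be added as a separate weighted term.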