Modifying MinBERT

CS 224N Final Project

CS 224N (Natural Language Processing with Deep Learning) was a wonderful class I took during the winter quarter of my junior year. Prior to taking 224N, I had already taken a bunch of other AI/ML/DL classes, and this one is known to be one of the more approachable courses to begin with, so I did not find the class too challenging. It was extremely well taught, and I really appreciated how the course staff updated much of the material to remain topical (side note: this is definitely one of Stanford’s greatest strengths as an academic institution). Much of the final portion of the class was devoted to covering the cutting edge (especially with ChatGPT released only a few months prior to this offering), which is a large reason why this class had over 650 students enrolled.

While I cannot share the code for this project given Stanford’s Honor Code, I would love to share the work that went into the final project conducted by Govind Chada and myself. If you would prefer to read the whole paper, you can find it here.

Background

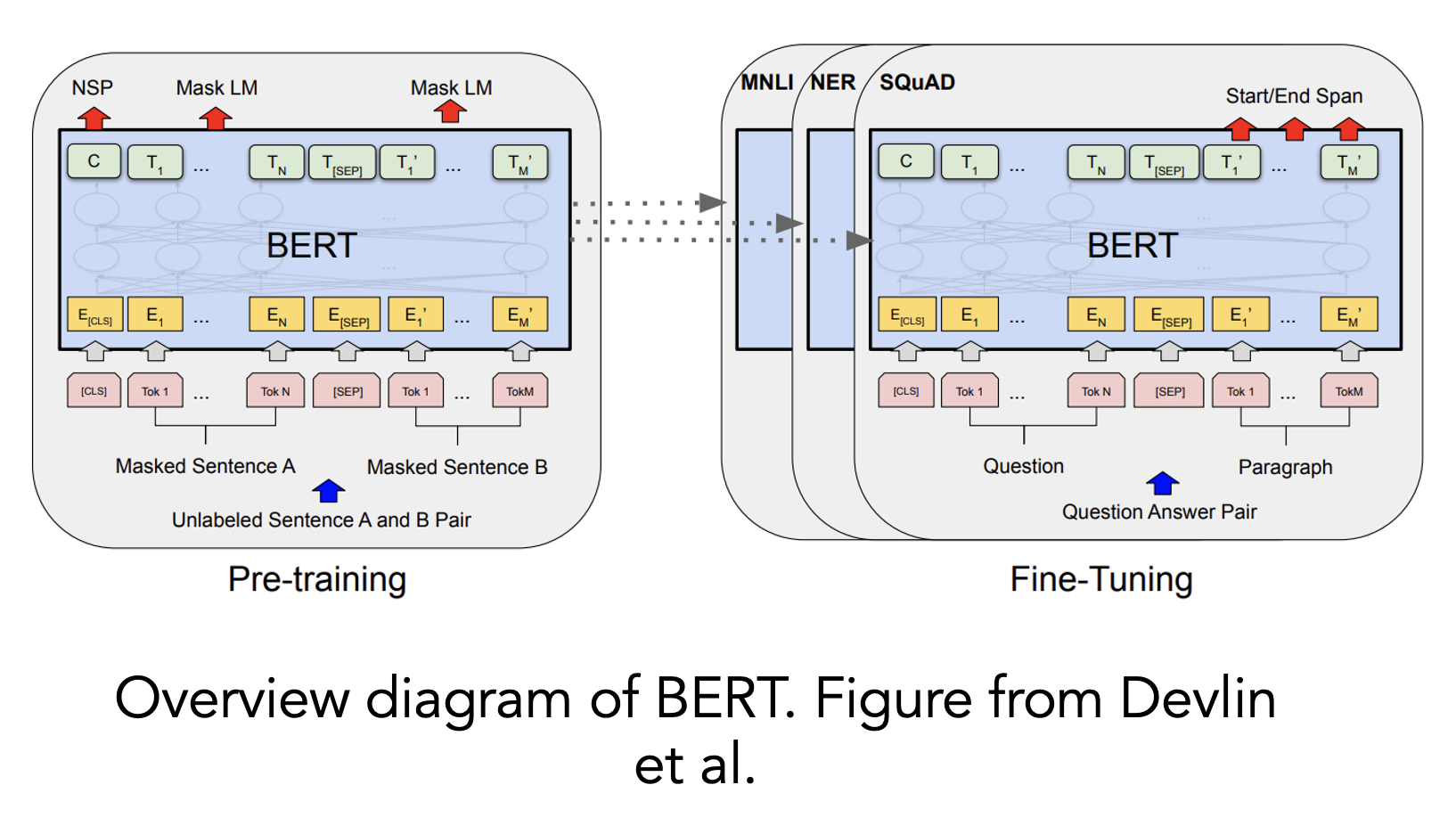

BERT, or Bidirectional Encoder Representations from Transformers, is an LLM based on the transformer architecture.

- Especially powerful when it comes to sequential textual data

- Training pipeline consists of pre-training on a large body of text and then fine-tuning it for a particular NLP task

In many domains such as healthcare and education, data collection is challenging, and training LLMs to perform well in these fields benefits from bootstrapping the performance on a specific task by utilizing learnings from other tasks where far more data is easily accessible.

Problem

BERT has been shown to perform well on a variety of natural language tasks. However, these models are optimized during training to perform well on a particular task. This inspires the central question that motivates this work: How can we perform multiple tasks with high accuracy?

For this project, we adapt BERT to solve 3 main tasks:

- Sentiment Analysis

  - Stanford Sentiment Treebank dataset

  - Phrases assigned labels of negative, somewhat negative, neutral, somewhat positive, or positive (0-4 respectively)

- Paraphrase Detection

  - Quora dataset

  - Binary labels describing whether a pair of questions are paraphrases of each other

- Semantic Textual Similarity

  - SemEval STS Benchmark dataset

  - Label measures degree of similarity between a pair of sentences (on a scale from 0 to 5, where 0 means unrelated and 5 means the pair has the same meaning)

Methodology and Results

Phase 1: Baseline, Gradient Surgery, and Cosine Similarity

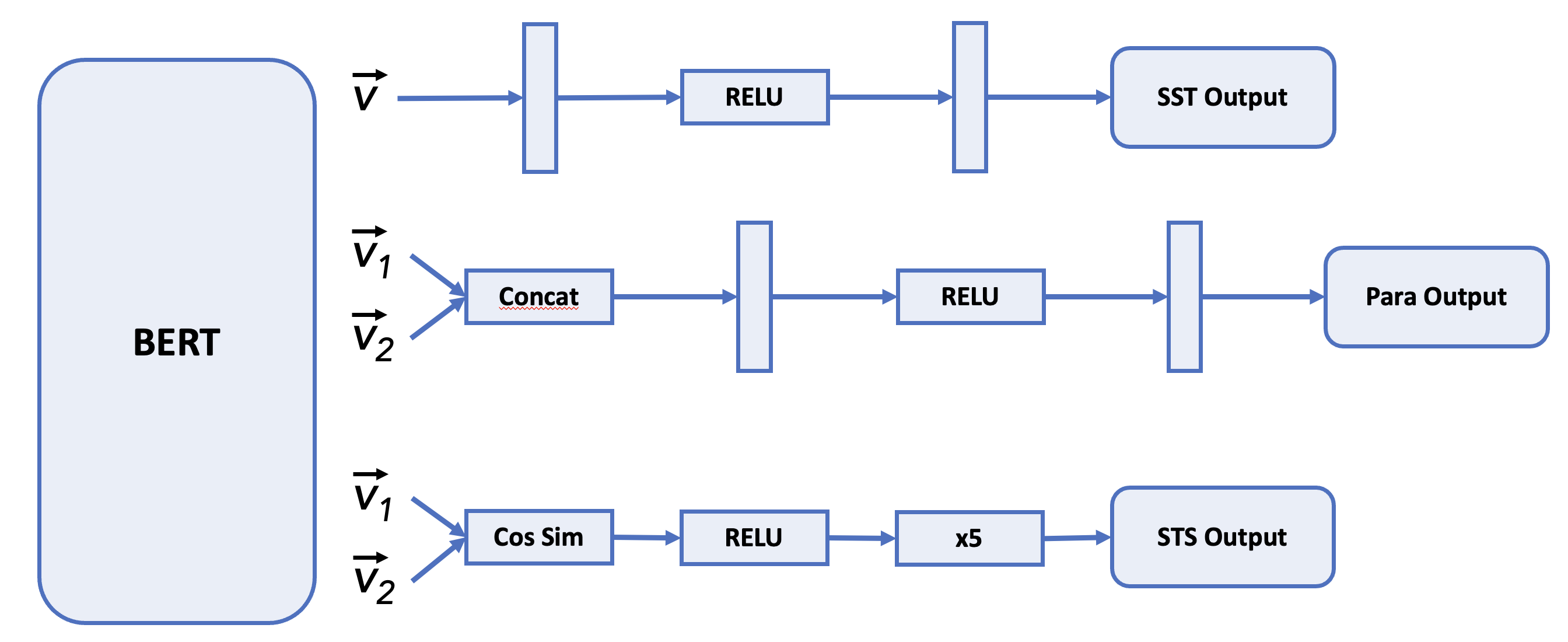

Baseline approach: BERT model with separate head for each task. Different loss functions used for each head.

- Sentiment analysis: Cross Entropy Loss

- Paraphrase detection: Binary Cross Entropy Loss

- Semantic textual similarity: Mean Squared Error
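To make the three losses concrete, here is a minimal per-example sketch in plain Python. This is not our project code (which I cannot share); the function names are made up for illustration, and I am assuming the sentiment head emits 5 raw logits, the paraphrase head a single raw logit, and the similarity head a single real number.

```python
import math


def cross_entropy(logits, label):
    """Multi-class cross entropy for one example (e.g. the 5 sentiment classes)."""
    # Subtract the max logit before exponentiating for numerical stability.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def binary_cross_entropy(logit, label):
    """Binary cross entropy on a raw logit; label is 0 or 1."""
    # softplus(x) = log(1 + exp(x)), written in a numerically stable form.
    softplus = max(logit, 0.0) + math.log1p(math.exp(-abs(logit)))
    return softplus - label * logit


def mean_squared_error(predictions, targets):
    """Mean squared error over a batch of similarity scores."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared) / len(squared)
```

In a deep learning framework these would be the built-in loss modules applied to whole batches; the point here is only that each head gets a loss matched to its label type.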

Experimentation to expand upon the baseline included:

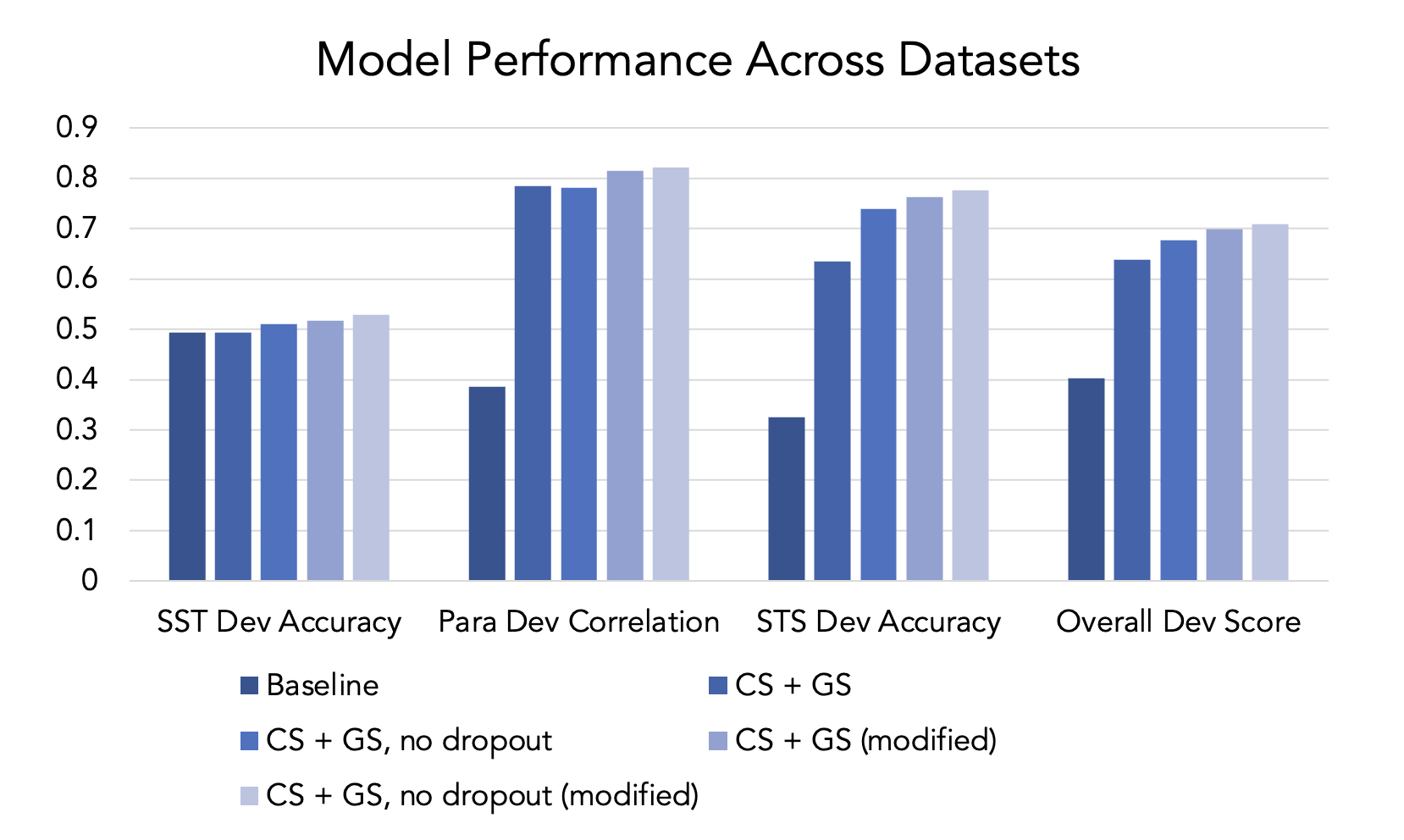

- Naïve gradient surgery (worse performance)

- Cosine similarity (CS) (significant improvement)

- CS with gradient surgery (GS) to counteract conflicting gradients (even more improvement)

- CS + GS and no dropout (best model during Phase 1)
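For readers unfamiliar with gradient surgery, the core idea can be sketched in a few lines. The version below follows the projection rule from the PCGrad method (when two task gradients point in conflicting directions, remove the conflicting component from one of them), operating on flat lists of floats. It is a simplified illustration rather than our implementation: the helper names are hypothetical, and the tasks are visited in a fixed order, whereas the published method randomizes the order.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def gradient_surgery(task_grads):
    """Combine per-task gradients after projecting away pairwise conflicts.

    task_grads: list of flat gradient vectors, one per task.
    Returns a single combined gradient vector.
    """
    projected = []
    for i, g_i in enumerate(task_grads):
        g = list(g_i)  # work on a copy so the originals stay untouched
        for j, g_j in enumerate(task_grads):
            if i == j:
                continue
            overlap = dot(g, g_j)
            if overlap < 0:  # negative dot product means the gradients conflict
                scale = overlap / dot(g_j, g_j)
                # Remove the component of g that points against g_j.
                g = [a - scale * b for a, b in zip(g, g_j)]
        projected.append(g)
    # Sum the de-conflicted gradients coordinate by coordinate.
    return [sum(parts) for parts in zip(*projected)]


# Two conflicting task gradients (their dot product is -1).
combined = gradient_surgery([[1.0, 0.0], [-1.0, 1.0]])
```

Gradients that do not conflict pass through unchanged, so in that case the result is just the ordinary sum of the task gradients.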

Phase 2: Training Pipeline Modifications

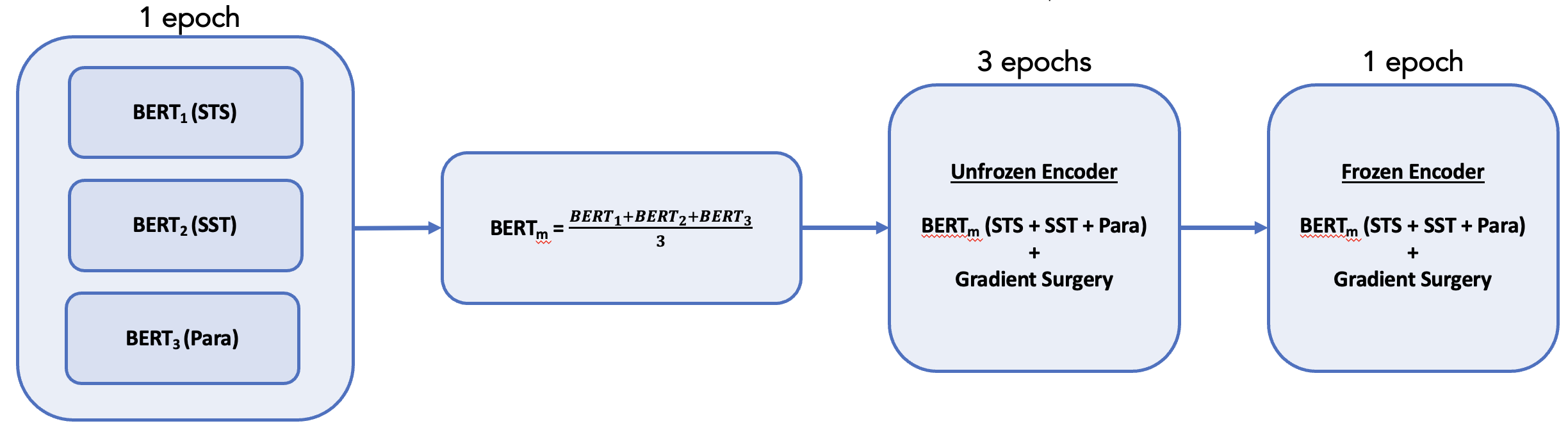

We then modified the approach taken in Phase 1 through a 3-step process:

- Pre-train 3 separate BERT models, one for each task

- Average their outputs to form a stronger initialization

- Run multitask finetuning starting from that initialization

This methodology was coupled with the trials that were already shown to perform the best in Phase 1, namely cosine similarity and gradient surgery, with and without dropout.
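One way to picture the averaging step is as an element-wise mean over the parameters of the single-task models. That reading is an assumption made for this sketch, as are the checkpoint layout (a dict from parameter name to a flat list of floats) and the function name; the sketch only illustrates how three single-task models could be merged into one starting point.

```python
def average_checkpoints(checkpoints):
    """Element-wise mean of several checkpoints with identical parameter names.

    checkpoints: list of dicts mapping parameter name -> flat list of floats.
    """
    averaged = {}
    for name in checkpoints[0]:
        # Group the k-th value of this parameter across all checkpoints.
        columns = zip(*(ckpt[name] for ckpt in checkpoints))
        averaged[name] = [sum(col) / len(checkpoints) for col in columns]
    return averaged


# Toy stand-ins for the three single-task models.
sentiment_model = {"encoder.weight": [1.0, 2.0]}
paraphrase_model = {"encoder.weight": [3.0, 4.0]}
similarity_model = {"encoder.weight": [5.0, 6.0]}

init = average_checkpoints([sentiment_model, paraphrase_model, similarity_model])
```

The merged parameters would then be loaded into the shared model before multitask finetuning begins.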

Analysis

We found that the best-performing model combines our training method with gradient surgery and cosine similarity:

- Naively applying gradient surgery negatively impacts results due to strongly conflicting gradients between the tasks

- Cosine similarity brings the tasks more in line with each other and allows for better multitask model performance

  - This also constrains the output of the head to be between 0 and 5, rather than letting the model learn this

- Using both gradient surgery and cosine similarity together boosts overall performance across the tasks, and combining this with our training scheme allows for even greater performance

  - This shows the importance of a good initialization point to begin finetuning from, and an even more efficient pretraining process could probably improve the results further

- We also found that the model begins to overfit after only a few epochs of finetuning, but removing dropout led to improved performance on the dev data
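To illustrate how a cosine similarity head can keep similarity predictions inside the 0 to 5 label range, here is a small sketch. The linear rescaling from [-1, 1] to [0, 5] is an assumption chosen for illustration (other mappings are possible), and the function names are hypothetical.

```python
import math


def cosine_similarity(u, v, eps=1e-8):
    """Cosine of the angle between two embedding vectors, in [-1, 1]."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # eps guards against division by zero for all-zero embeddings.
    return dot / max(norm_u * norm_v, eps)


def similarity_score(emb_a, emb_b):
    """Map cosine similarity linearly from [-1, 1] onto the 0-5 label scale."""
    return 2.5 * (cosine_similarity(emb_a, emb_b) + 1.0)
```

Because the score is bounded by construction, the head does not have to learn the output range from data, and the result can be compared directly against the gold label with mean squared error.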

Conclusion

Throughout the course of this project, we were able to empirically determine certain modifications to our baseline multitask BERT implementation that resulted in stronger task-specific and overall performance. Our best model used single task pretraining to provide a strong initialization for multitask finetuning, combined with CS, GS and removing dropout regularization. While experimental success was logged using the dev datasets, our overall test score was found to be 0.702.

Limitations include:

- Universally low performance on the sentiment classification task, pulling down the overall score greatly

- Cosine similarity only benefitted the STS task, and we did not find any beneficial task-specific approaches for the Para and SST tasks

One future step involves trying an optimization-based meta-learning approach such as MAML. We saw that having a good initialization point before finetuning improved downstream task performance, so with the construction of more related tasks for meta-training, we hypothesize this may boost performance across the board.